Artificial intelligence (AI) is a rapidly evolving field that encompasses a wide range of technologies and applications, from machine learning and deep learning to natural language processing and computer vision. As AI continues to advance, it is transforming industries and reshaping the way we live and work. This guide focuses on the engineering aspects of AI, providing a comprehensive understanding of the fundamental concepts, hardware components, software tools, and real-world applications. By exploring the technical depth of AI, you will gain valuable insights into the current state of the field and its potential impact on various industries. This knowledge will equip you with the expertise needed to design and develop innovative AI solutions that can address complex challenges and drive future progress.

Alan Turing was a pioneering mathematician, logician, and computer scientist who made significant contributions to the development of artificial intelligence. His groundbreaking work laid the foundation for modern computing and AI. Turing’s concept of the “Turing machine” established the theoretical basis for computation and provided a framework for understanding intelligent systems. His insights and ideas continue to shape the field of AI and inspire advancements in machine learning, natural language processing, and problem-solving algorithms. Turing’s visionary contributions have had a profound impact on the way we perceive and harness the power of AI, making him a key figure in the history of artificial intelligence.

Fundamentals of Artificial Intelligence

What Is Artificial Intelligence?

Artificial intelligence (AI) is a field of computer science focused on developing machines that can mimic human intelligence. It encompasses tasks like learning, reasoning, problem-solving, and language understanding. AI is commonly grouped into three categories. Narrow AI specializes in specific tasks and covers every AI system in use today. General AI would be able to perform any intellectual task a human can. Superintelligent AI would surpass human intelligence. The last two remain hypothetical. Explore the fascinating world of AI and its potential to revolutionize various industries.

Artificial general intelligence (AGI) refers to highly autonomous systems that outperform humans at most economically valuable work. Today’s systems are far narrower. Deep Blue, a chess-playing computer developed by IBM, famously defeated world chess champion Garry Kasparov in 1997. Its computational power and decision-making were confined to a single domain (chess), however, so it is not considered AGI. Forecasting systems, which predict future outcomes from historical data and statistical models, are likewise specialized tools. Language models, such as OpenAI’s GPT-3, are AI systems trained to understand and generate human-like text. Despite their remarkable language capabilities, they are still far from achieving AGI. AGI aims to replicate human-level intelligence across many domains, whereas each of these examples showcases progress on a specific task or area of AI research.

Machine Learning

Machine learning is a critical component of artificial intelligence (AI) that focuses on developing algorithms and models enabling computers to learn from data and make predictions or decisions. It encompasses three main types: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning trains algorithms on labeled data, in which each input is paired with its correct output. The goal is to learn a mapping that can make predictions on new, unseen data. Common tasks include regression (predicting a continuous value) and classification (predicting a category).
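Here is a minimal sketch of supervised regression. It fits a straight line to a handful of labeled examples with ordinary least squares, one of the simplest supervised learners. The data points and the `fit_line` helper are illustrative assumptions, not taken from any particular library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the least-squares slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled training data: each input x is paired with its correct output y.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1

slope, intercept = fit_line(xs, ys)
prediction = slope * 5.0 + intercept  # predict the output for an unseen input, x = 5
```

After training, `prediction` holds the model’s output for an input it never saw during training. Producing such outputs is the whole point of learning a mapping from labeled data.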

Unsupervised learning deals with unlabeled data, where algorithms discover patterns and relationships on their own. Typical tasks include clustering, which groups similar data points, and dimensionality reduction, which reduces the number of features while preserving the data’s structure.
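To make clustering concrete, here is a small sketch of k-means (Lloyd’s algorithm), one common clustering method among many. It assumes two-dimensional points and a known number of clusters `k`. The data and the `kmeans` helper are hypothetical.

```python
import random

def kmeans(points, k, iterations=20, seed=0):
    """Cluster 2-D points into k groups with Lloyd's k-means algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(
                range(k),
                key=lambda i: (p[0] - centroids[i][0]) ** 2 + (p[1] - centroids[i][1]) ** 2,
            )
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return centroids, clusters

# Unlabeled data: two loose groups, but nothing says which point belongs where.
points = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centroids, clusters = kmeans(points, k=2)
```

The algorithm never sees labels. It separates the two groups purely from the distances between points. In practice, both the choice of `k` and the initialization affect the result.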

Reinforcement learning involves an agent learning to make decisions through interactions with an environment, aiming to maximize cumulative rewards over time. It excels in sequential decision-making domains like robotics and game playing.
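Reinforcement learning can be illustrated with tabular Q-learning, a classic algorithm. The toy environment is assumed purely for illustration: five states on a line, with a reward of 1 only for reaching the rightmost state. The hyperparameters (`alpha`, `gamma`, `epsilon`) are arbitrary choices for this sketch.

```python
import random

N_STATES = 5        # states 0..4 on a line; state 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state, action_idx):
    """Hypothetical toy environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.5, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: explore at random (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Move Q toward the reward plus the discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
```

The agent is never told that moving right is correct. It infers this from delayed rewards, which is what distinguishes reinforcement learning from supervised learning.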

Discover the power of machine learning in advancing AI and its applications across various industries.


Deep Learning

Deep learning, a subset of machine learning, utilizes artificial neural networks (ANNs) to model intricate patterns in data. ANNs, inspired by biological neural networks, consist of interconnected layers of artificial neurons. With multiple hidden layers, deep learning models learn hierarchical representations, making them effective for tasks like image recognition, speech processing, and natural language understanding.

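Backpropagation, a key concept, computes the gradient of the loss function with respect to every weight by applying the chain rule layer by layer, from the output back to the input. An optimizer such as gradient descent then uses these gradients to update the weights iteratively, reducing prediction errors and improving performance.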
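The sketch below applies the chain rule by hand to a tiny, hypothetical network: one input, two tanh hidden units, and one linear output. It checks the result against finite differences, a standard sanity test for gradient code. It then takes a single gradient-descent step to show how an optimizer consumes the gradients. Real frameworks automate all of this with automatic differentiation.

```python
import math

# Hypothetical tiny network: 1 input -> 2 tanh hidden units -> 1 linear output.
# Flat parameter order: [w1_0, w1_1, b1_0, b1_1, w2_0, w2_1, b2]

def forward(p, x):
    z = [p[0] * x + p[2], p[1] * x + p[3]]   # hidden pre-activations
    h = [math.tanh(zi) for zi in z]           # hidden activations
    y_hat = p[4] * h[0] + p[5] * h[1] + p[6]  # linear output
    return z, h, y_hat

def loss(p, x, y):
    return 0.5 * (forward(p, x)[2] - y) ** 2  # squared error

def backprop(p, x, y):
    """Return dLoss/dp for every parameter by applying the chain rule backward."""
    _, h, y_hat = forward(p, x)
    d_y = y_hat - y                                       # dL/dy_hat
    d_h = [d_y * p[4], d_y * p[5]]                        # back through output weights
    d_z = [d_h[i] * (1.0 - h[i] ** 2) for i in range(2)]  # tanh'(z) = 1 - tanh(z)^2
    return [d_z[0] * x, d_z[1] * x,   # dL/dw1
            d_z[0], d_z[1],           # dL/db1
            d_y * h[0], d_y * h[1],   # dL/dw2
            d_y]                      # dL/db2

def numerical_grad(p, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for i in range(len(p)):
        plus, minus = p[:], p[:]
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads

params = [0.5, -0.3, 0.1, 0.2, 0.7, -0.4, 0.05]
x, y = 1.5, 0.8
analytic = backprop(params, x, y)
numeric = numerical_grad(params, x, y)

# One gradient-descent step: the optimizer uses backprop's gradients to update weights.
learning_rate = 0.1
updated = [w - learning_rate * g for w, g in zip(params, analytic)]
```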

Deep learning offers advantages such as learning features automatically from raw data, which reduces the need for manual feature engineering. It achieves state-of-the-art performance in image classification, speech recognition, and natural language processing.

Despite challenges, such as data requirements, computational demands, and interpretability, deep learning has revolutionized AI, driving significant advancements in the field. Explore the potential of deep learning in solving complex AI problems and unlocking new possibilities.

AI Algorithms and Techniques

Artificial intelligence (AI) includes a range of algorithms and techniques that enable machines to learn, reason, and make decisions. Understanding these tools helps engineers develop efficient AI solutions for diverse applications. Harnessing AI’s potential drives innovation across industries.

Search and Optimization Algorithms

Search and optimization algorithms play a crucial role in AI, as they enable the exploration of large solution spaces and the identification of optimal solutions to complex problems. These algorithms are used in a variety of AI tasks, such as pathfinding, scheduling, and game playing, as well as in the training of machine learning models.

Search algorithms are used to explore and navigate complex solution spaces, typically by systematically traversing a graph or tree structure that represents the problem domain. Examples of search algorithms include A* and Dijkstra’s algorithm, which are used for finding the shortest path between nodes in a graph. These algorithms work by expanding the search space in a guided manner, using heuristics or cost functions to prioritize the exploration of promising solution paths.
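A compact A* search on a grid shows the guided expansion described above. This sketch assumes a 4-connected grid with unit move costs and uses Manhattan distance as the heuristic. Setting the heuristic to zero turns it into Dijkstra’s algorithm. The grid layout is invented for illustration.

```python
import heapq

def a_star(grid, start, goal):
    """Shortest path length on a 4-connected grid; '#' cells are walls.
    Uses Manhattan distance, which never overestimates here, as the heuristic."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best_g = {start: 0}                # cheapest known cost to reach each cell
    frontier = [(h(start), 0, start)]  # priority queue ordered by f = g + h
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            return g
        if g > best_g.get(cell, float("inf")):
            continue                   # stale entry: a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1          # every move costs 1
                if new_g < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = new_g
                    heapq.heappush(frontier, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None                        # goal unreachable

grid = [
    "....",
    ".##.",
    "...#",
    "#...",
]
path_length = a_star(grid, (0, 0), (3, 3))
```

Manhattan distance never overestimates the remaining cost on this kind of grid. As a result, the first time the goal is popped from the queue, its cost is optimal.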

Optimization algorithms are used to find the best solution to a problem, given a set of constraints and an objective function that measures the quality of a solution. These algorithms search the solution space for the optimal solution, which minimizes or maximizes the objective function, depending on the problem. Examples of optimization algorithms include genetic algorithms and simulated annealing, which are inspired by natural processes and use stochastic search strategies to explore the solution space. Optimization algorithms are widely used in AI for tasks such as parameter tuning, model selection, and feature selection, as well as in various engineering and scientific applications.
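Simulated annealing can be sketched in a few lines. The version below minimizes a hypothetical one-dimensional function. The step size, starting temperature, and geometric cooling rate are illustrative assumptions rather than recommended settings.

```python
import math
import random

def simulated_annealing(objective, x0, steps=5000, t0=1.0, cooling=0.995,
                        step_size=0.5, seed=0):
    """Minimize a 1-D objective. Worse moves are sometimes accepted, with a
    probability that shrinks as the temperature cools, to escape local minima."""
    rng = random.Random(seed)
    x, fx = x0, objective(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(steps):
        candidate = x + rng.gauss(0.0, step_size)  # random neighbour
        fc = objective(candidate)
        delta = fc - fx
        if delta < 0 or rng.random() < math.exp(-delta / t):
            x, fx = candidate, fc                  # accept the move
            if fx < best_f:
                best_x, best_f = x, fx             # remember the best solution seen
        t = max(t * cooling, 1e-9)                 # geometric cooling schedule
    return best_x, best_f

# Hypothetical objective with its minimum at x = 3.
best_x, best_f = simulated_annealing(lambda x: (x - 3.0) ** 2, x0=-10.0)
```

Early on, the high temperature lets the search accept uphill moves and wander. As it cools, the search behaves increasingly like greedy descent.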

Natural Language Processing

Natural Language Processing (NLP) is a subfield of AI that focuses on enabling computers to understand, interpret, and generate human language. It plays a crucial role in AI applications such as chatbots, virtual assistants, and sentiment analysis.

NLP faces challenges due to the complexity and ambiguity of human language. Rule-based methods, statistical models, and deep learning algorithms are employed to tackle these challenges. Rule-based methods use hand-crafted rules to analyze language data, while statistical models leverage probabilistic techniques to model language structure. Deep learning algorithms, like recurrent neural networks and transformers, automatically learn patterns from large-scale language data, achieving state-of-the-art performance.
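As an example of the statistical approach, a bigram language model estimates the probability of each word given the previous one from raw counts. This is a minimal sketch on a made-up three-sentence corpus. It applies no smoothing, so unseen word pairs get probability zero.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count word-pair frequencies; <s> marks the start of each sentence."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split()
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts

def prob(counts, prev, word):
    """Maximum-likelihood estimate of P(word | prev)."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0

corpus = ["The cat sat", "The cat ran", "The dog sat"]
model = train_bigram(corpus)
p_cat_given_the = prob(model, "the", "cat")  # "cat" follows "the" in 2 of 3 sentences
```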

Understanding the algorithms and techniques in NLP empowers engineers to develop AI systems that can effectively communicate, extract insights from text data, and enable innovative applications. By advancing language understanding, NLP drives the progress of AI, revolutionizing human-computer interaction.

AI Hardware

The hardware components used in AI systems play a critical role in determining the performance, power consumption, and overall capabilities of the system. These components must be carefully selected and optimized to meet the specific requirements and constraints of AI applications. Some of the key hardware components used in AI systems include central processing units (CPUs), graphics processing units (GPUs), and tensor processing units (TPUs), each with its own set of advantages and trade-offs.

CPUs, GPUs, and TPUs

Central processing units (CPUs) are the general-purpose processors found in most computers and electronic devices. They are designed to execute a wide range of instructions and perform complex calculations. CPUs can run AI workloads, but they are often not the most efficient option. They have relatively few cores, optimized for sequential, branch-heavy code, and so offer limited parallelism compared with GPUs. However, CPUs are highly versatile and are used for a variety of AI tasks, such as data preprocessing, feature extraction, and training smaller models.

Graphics processing units (GPUs) were originally designed for rendering graphics in video games and other visual applications. They have since become popular for AI workloads due to their massively parallel architecture and high computational throughput. GPUs are particularly well suited to deep learning, which involves large-scale matrix operations and requires significant parallelism. Popular GPUs for AI applications include NVIDIA’s GeForce consumer cards and its data center GPUs (formerly branded Tesla), as well as AMD’s Instinct accelerators (formerly Radeon Instinct).

Tensor processing units (TPUs) are specialized AI accelerators developed by Google specifically for deep learning tasks. TPUs are designed to perform tensor operations, which are the fundamental building blocks of deep learning models, at high speed and with low power consumption. TPUs offer several advantages over CPUs and GPUs for AI workloads, such as higher performance per watt and lower latency. Google’s Cloud TPU and Edge TPU are examples of TPUs that can be used for AI applications in the cloud and on edge devices, respectively.

When selecting hardware components for an AI system, it is important to consider factors such as processing power, memory capacity, power consumption, and cost, as well as the specific requirements of the AI algorithm and the target device. By carefully balancing these factors, engineers can design and develop AI systems that deliver the desired performance and functionality while minimizing power consumption and cost.
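Memory capacity is often the first constraint to check. A back-of-the-envelope estimate multiplies the parameter count by the bytes used per parameter. The sketch below assumes a hypothetical 7-billion-parameter model and a few common numeric formats. The `weight_memory_gb` helper is illustrative.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weight_memory_gb(num_params, precision="fp32"):
    """Approximate memory (1 GB = 1e9 bytes) needed just to hold the model weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Hypothetical 7-billion-parameter model:
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_memory_gb(7e9, precision), "GB")
# fp32 28.0 GB
# fp16 14.0 GB
# int8 7.0 GB
```

This estimate covers the weights alone. Activations and, during training, gradients and optimizer state add substantially to the footprint. That is one reason lower-precision formats are attractive on memory-limited devices.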

Neuromorphic Computing

Neuromorphic computing is an emerging field that aims to develop hardware architectures and systems inspired by the structure and function of biological neural networks. The goal of neuromorphic computing is to create more efficient and adaptive computing systems that can perform AI tasks with lower power consumption and higher speed compared to traditional AI hardware, such as CPUs, GPUs, and TPUs.

Neuromorphic computing systems typically consist of specialized chips or circuits that mimic the behavior of biological neurons and synapses. These chips can be designed using various technologies, such as complementary metal-oxide-semiconductor (CMOS) transistors, memristors, or even novel materials like carbon nanotubes. By leveraging the inherent parallelism and adaptability of neural networks, neuromorphic computing systems can perform complex AI tasks, such as pattern recognition, sensory processing, and decision-making, with greater efficiency and lower power consumption than traditional AI hardware.
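Many neuromorphic designs build on spiking neuron models. The sketch below simulates a leaky integrate-and-fire (LIF) neuron, a widely used simplified model, in discrete time. It is a software illustration with arbitrary parameters, not a description of how any particular chip implements its neurons.

```python
def simulate_lif(input_current, steps=200, dt=1.0, tau=20.0, threshold=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron: the membrane potential leaks toward rest,
    integrates the input, and emits a spike (then resets) when it crosses threshold."""
    v = v_reset
    spike_times = []
    for t in range(steps):
        v += (dt / tau) * (-v + input_current)  # Euler step of tau * dv/dt = -v + I
        if v >= threshold:
            spike_times.append(t)               # record a spike event
            v = v_reset
    return spike_times

weak = simulate_lif(0.5)    # potential settles at 0.5, below threshold: no spikes
strong = simulate_lif(2.0)  # crosses threshold repeatedly: periodic spikes
```

Information is carried by the timing and rate of spikes rather than by continuous activations. This is why event-driven hardware can stay idle, and save power, when there is no input.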

Some notable examples of neuromorphic computing technologies include IBM’s TrueNorth and Intel’s Loihi. IBM’s TrueNorth is a neuromorphic chip that consists of 1 million programmable neurons and 256 million programmable synapses, enabling the implementation of large-scale neural networks with low power consumption. Intel’s Loihi is a neuromorphic research chip that features 128 neuromorphic cores, each containing 1,024 artificial neurons, and supports on-chip learning and adaptation.

Neuromorphic computing has the potential to revolutionize the field of AI hardware by enabling the development of more efficient, adaptive, and scalable AI systems. As neuromorphic computing technology continues to advance, it is expected to play an increasingly important role in the design and implementation of AI systems, particularly in edge computing environments where power efficiency and real-time processing are critical requirements.

AI Software

The development and deployment of AI systems require a variety of software components and tools, including AI frameworks, libraries, operating systems, and development environments. These software tools enable engineers to design, implement, and optimize AI algorithms, taking into account the unique requirements and constraints of the target hardware and application domain. Additionally, AI software must be compatible with a diverse range of hardware components and software platforms, which can add complexity to the development process.

AI Frameworks and Libraries

AI frameworks and libraries simplify the development of AI algorithms by providing pre-built functions and modules. TensorFlow, an open-source framework from Google, offers flexibility and efficiency for building and deploying AI models. PyTorch, originally developed by Facebook (now Meta), provides a dynamic computation graph and an intuitive programming model. scikit-learn, a popular Python library, offers classical machine learning algorithms along with tools for data preprocessing and model evaluation.

Consider factors such as ease of use, performance, hardware compatibility, and community support when selecting an AI framework or library. The choice impacts the development process and system performance, so ensure it meets application requirements and target hardware.

AI Development Environments

AI development environments are software tools and platforms that facilitate the design, implementation, and deployment of AI algorithms and models. These environments provide a range of features and capabilities to support the AI development process, such as code editing, debugging, version control, and collaboration. By using an AI development environment, engineers can streamline their workflow, improve productivity, and ensure the quality and reliability of their AI solutions.

Some popular AI development environments include Jupyter Notebook, Google Colab, and Microsoft Visual Studio Code. These environments offer various features and capabilities to support the AI development process, such as interactive code execution, visualization, and collaboration.

Jupyter Notebook is an open-source web application that allows users to create and share documents containing live code, equations, visualizations, and narrative text. Jupyter Notebook is widely used in the AI community for tasks such as data exploration, model development, and documentation. It supports multiple programming languages, including Python, R, and Julia, and integrates with popular AI frameworks and libraries, such as TensorFlow, PyTorch, and scikit-learn.

Google Colab is a cloud-based AI development environment that provides a Jupyter Notebook-like interface for writing and executing code in the browser. Its free tier offers usage-limited access to computing resources, including GPUs and TPUs. This makes it an attractive option for AI developers who need high-performance hardware to train and test their models. Colab also integrates with Google Drive, allowing users to store and share their notebooks and data with ease.

Microsoft Visual Studio Code is a popular code editor that supports a wide range of programming languages and development tools, including AI frameworks and libraries. Visual Studio Code offers features such as syntax highlighting, code completion, debugging, and version control integration, making it a versatile and powerful tool for AI development. Additionally, Visual Studio Code has a large ecosystem of extensions and plugins, which can further enhance its capabilities and streamline the AI development process.

By leveraging these AI development environments and their associated tools and features, engineers can more effectively design, implement, and deploy AI algorithms and models, ensuring the success of their AI projects and the advancement of the field.

AI Applications

Artificial intelligence has a broad range of applications across various industries, enabling the development of intelligent systems that can learn, reason, and make decisions based on data. These AI-powered systems have the potential to transform industries, improve efficiency, and enhance the quality of life for people around the world. Some of the most promising applications of AI include autonomous vehicles, robotics, and healthcare, among others.

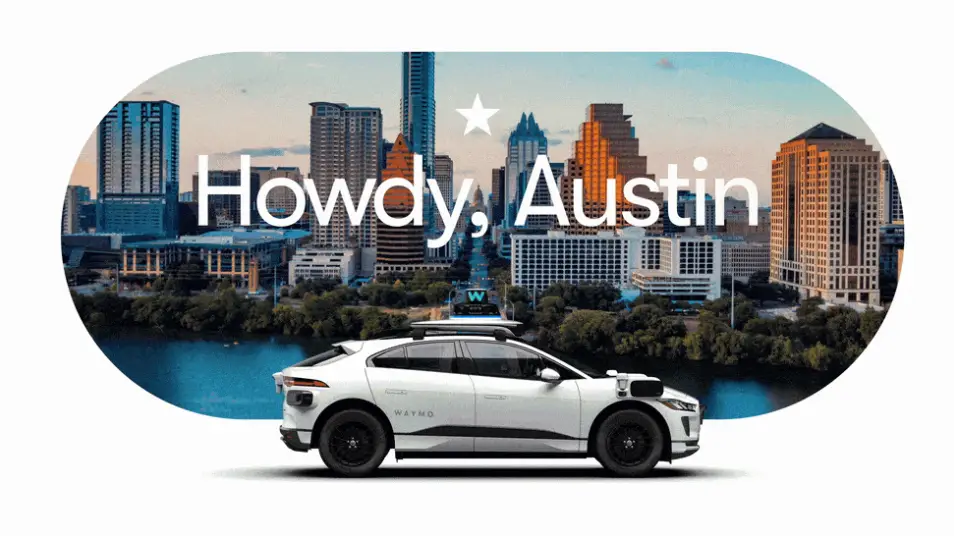

Autonomous Vehicles

AI plays a critical role in the development of autonomous vehicles, such as self-driving cars and drones, by enabling them to perceive their environment, make decisions, and navigate complex situations. AI algorithms and techniques, such as computer vision, sensor fusion, and path planning, are used to process data from various sensors, such as cameras, lidar, and radar, and generate control commands for the vehicle’s actuators.

Computer vision is a key AI technology used in autonomous vehicles to analyze images and video streams from cameras, enabling the vehicle to detect and recognize objects, such as other vehicles, pedestrians, and traffic signs. Deep learning models, such as convolutional neural networks (CNNs), are commonly used for computer vision tasks due to their ability to learn complex patterns and representations in image data.
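The core operation of a CNN layer is sliding a small kernel over the image and computing weighted sums. The sketch below implements that operation in plain Python. Technically it is cross-correlation, which deep learning libraries call convolution. A hand-chosen vertical-edge kernel stands in for a learned filter, and the 4x5 image is made up.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image window under the kernel.
            output[i][j] = sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw)
            )
    return output

# A 4x5 grayscale patch: dark on the left, bright on the right (a vertical edge).
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Hand-written vertical-edge detector; a CNN would learn kernels like this from data.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
edges = conv2d(image, kernel)
```

The output is zero over the flat region and large where brightness changes. A trained CNN stacks many such learned filters, so later layers respond to increasingly complex patterns.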

Sensor fusion is the process of combining data from multiple sensors, such as cameras, lidar, radar, and GPS, to create a more accurate and complete representation of the vehicle’s environment. AI algorithms, such as Kalman filters and particle filters, are used to integrate and process the sensor data, providing a robust and reliable basis for decision-making and control.
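The heart of a Kalman filter is a weighted average that trusts each input in proportion to its certainty. This one-dimensional sketch fuses two hypothetical position readings along a lane: a noisy GPS fix and a more precise lidar-based estimate. It then predicts forward one step. Real vehicle filters track multi-dimensional states using matrix versions of the same equations.

```python
def kalman_update(mean, var, measurement, meas_var):
    """Measurement update of a 1-D Kalman filter: blend the current estimate
    with a new reading, weighting each by how certain it is."""
    gain = var / (var + meas_var)  # Kalman gain: how much to trust the new reading
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var   # the fused estimate is more certain than either input
    return new_mean, new_var

def kalman_predict(mean, var, motion, motion_var):
    """Prediction step: shift the estimate by the expected motion and add process noise."""
    return mean + motion, var + motion_var

# Hypothetical position readings along a lane, in metres.
prior_mean, prior_var = 0.0, 1000.0                                  # vague prior
mean, var = kalman_update(prior_mean, prior_var, 10.2, 4.0)          # noisy GPS fix
fused_mean, fused_var = kalman_update(mean, var, 9.9, 0.25)          # precise lidar estimate
pred_mean, pred_var = kalman_predict(fused_mean, fused_var, 1.0, 0.1)  # vehicle moves 1 m
```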

Path planning is the process of determining the optimal route for the vehicle to follow, taking into account factors such as the vehicle’s dynamics, the environment, and the desired destination. AI algorithms, such as A* and Dijkstra’s algorithm, are used to search the solution space and find the shortest or most efficient path, while considering constraints such as traffic, obstacles, and road conditions.

The development of autonomous vehicles has the potential to revolutionize transportation, reducing traffic accidents, improving fuel efficiency, and providing new mobility options for people with disabilities. However, there are also significant challenges and ethical considerations associated with the widespread adoption of autonomous vehicles, such as the need for robust safety systems, regulatory frameworks, and public acceptance.

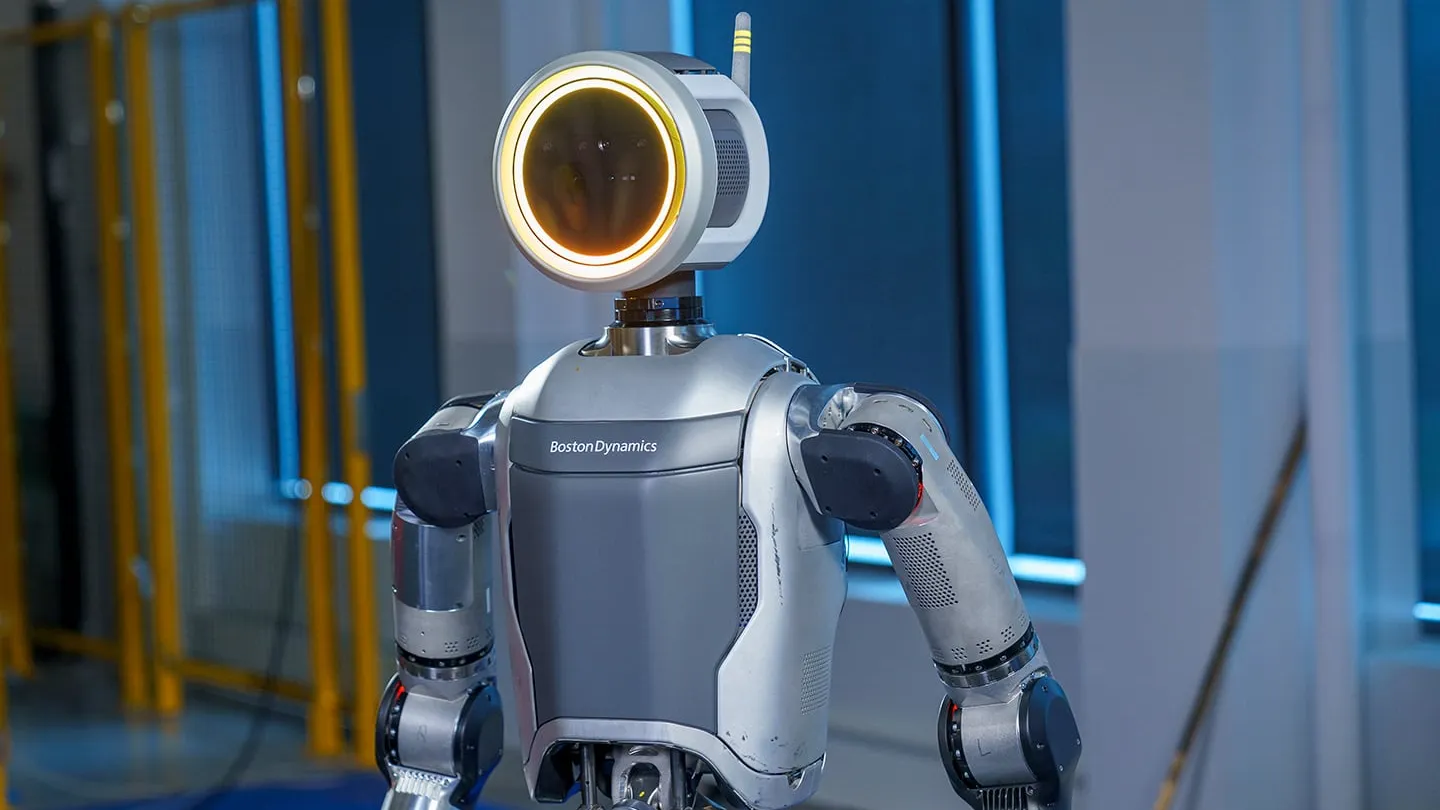

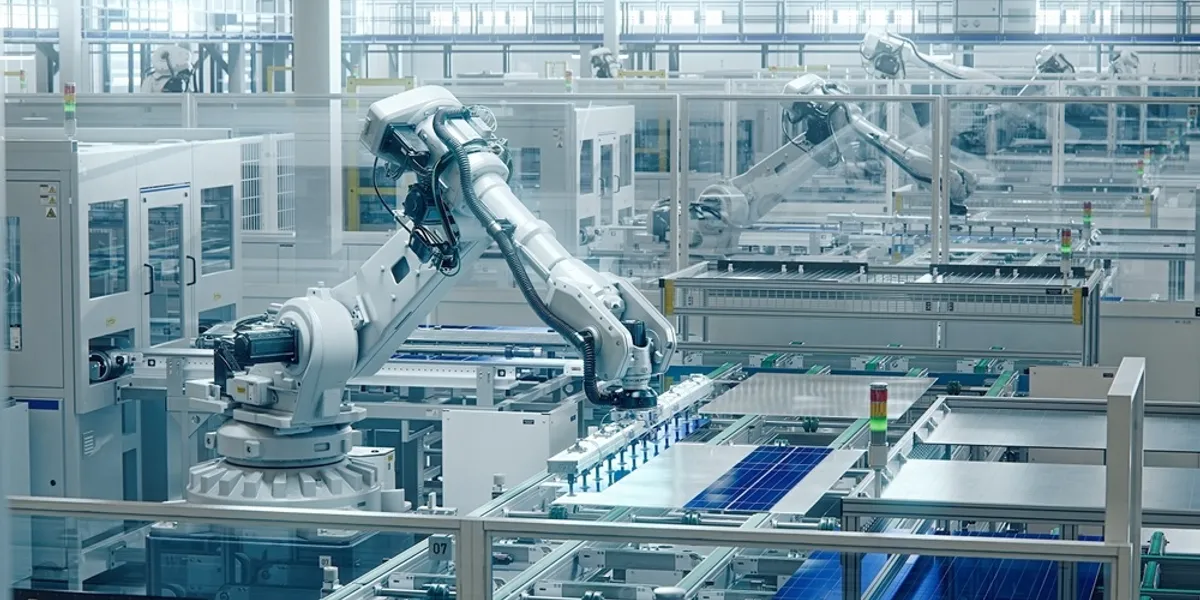

Robotics

Robotics is a multidisciplinary field that combines AI, mechanical engineering, and computer science to design, build, and control robots. These robots can perform a wide range of tasks, from simple repetitive actions to complex, autonomous decision-making and problem-solving. AI plays a crucial role in robotics, enabling robots to perceive their environment, plan their actions, and learn from their experiences.

One of the key AI technologies used in robotics is perception, which involves processing and interpreting sensory data, such as images, sounds, and tactile information. Perception enables robots to understand their environment and make decisions based on the available information. Techniques from computer vision and deep learning are used to analyze this data, allowing robots to recognize objects, track their position, and estimate their motion.

Manipulation is another important capability in robotics. It involves controlling robotic arms, grippers, and other actuators to perform tasks such as grasping, lifting, and moving objects. Algorithms such as inverse kinematics and motion planning calculate the optimal trajectories and control signals for the robot’s actuators, ensuring smooth and precise movements.
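For a simple planar arm with two links, inverse kinematics has a closed-form solution based on the law of cosines. The sketch below assumes link lengths of 1.0 and an arbitrary target point. It returns one of the two mirror-image solutions, the one with a positive elbow angle. Real manipulators with more joints usually need numerical solvers.

```python
import math

def forward_kinematics(theta1, theta2, l1, l2):
    """End-effector (x, y) of a planar two-link arm for the given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse_kinematics(x, y, l1, l2):
    """Joint angles that place the end effector at (x, y), positive-elbow solution."""
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        raise ValueError("target is out of reach")
    theta2 = math.acos(cos_t2)
    # Shoulder angle: direction to the target minus the offset caused by the bent elbow.
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2

theta1, theta2 = inverse_kinematics(1.2, 0.8, l1=1.0, l2=1.0)
reached = forward_kinematics(theta1, theta2, 1.0, 1.0)
```

Running forward kinematics on the returned angles should land the end effector back on the target, which makes a convenient check.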

Navigation is a critical AI technology for mobile robots, such as autonomous vehicles and drones, which need to move through their environment while avoiding obstacles and reaching their goals. AI algorithms, such as simultaneous localization and mapping (SLAM) and path planning, are used to estimate the robot’s position, build a map of the environment, and plan the optimal path to the target destination.

In addition to these specific AI technologies, robotics also involves the integration of various hardware and software components, such as sensors, actuators, and control systems, to create a cohesive and functional robot. By leveraging AI algorithms and techniques, engineers can develop more intelligent, adaptable, and autonomous robots that can perform a wide range of tasks and applications, from industrial automation and service robotics to exploration and search-and-rescue missions.

Healthcare

Artificial intelligence (AI) has the potential to revolutionize healthcare by enhancing diagnostics, treatment plans, and patient outcomes. Current medical AI systems are narrow AI. They are neither self-aware nor capable of a “theory of mind” (modeling the beliefs and intentions of others), and both remain hypothetical categories of AI. These systems are nonetheless becoming increasingly sophisticated at recognizing patterns in medical data. They rely on vast amounts of training data to learn and improve their performance over time.

AI is also used in healthcare through expert systems: computer programs that capture human expertise in a specific domain, often as a knowledge base of if-then rules. These systems can analyze complex medical data, suggest possible diagnoses, and recommend personalized treatment plans for clinicians to review. By leveraging AI, healthcare professionals can access valuable insights and make more informed decisions, leading to better patient outcomes.
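Classic expert systems often encode expertise as if-then rules and apply them with forward chaining. The sketch below shows the inference loop. The rules and fact names are hypothetical placeholders invented for illustration, not clinical guidance.

```python
def forward_chain(facts, rules):
    """Repeatedly fire any rule whose conditions are all known facts,
    adding its conclusion, until nothing new can be inferred."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical, non-clinical placeholder rules, for illustration only.
rules = [
    (("fever", "cough"), "flag_respiratory_review"),
    (("flag_respiratory_review", "low_oxygen_reading"), "flag_urgent_review"),
]
inferred = forward_chain({"fever", "cough", "low_oxygen_reading"}, rules)
```

The second rule fires only after the first has added its conclusion, which is how chained reasoning emerges from simple rules. Production systems also add certainty factors, explanations of which rules fired, and far larger, validated knowledge bases.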

As the use of AI continues to expand in healthcare, it is important to address ethical and regulatory issues. Safeguarding patient privacy, ensuring transparent decision-making, and maintaining accountability are crucial aspects of responsible AI implementation. By harnessing AI in healthcare while addressing these issues, we can unlock its full potential to revolutionize the industry and improve the lives of patients.

In conclusion, artificial intelligence (AI) has the potential to revolutionize healthcare by improving patient outcomes and enabling more accurate predictions. While AI systems can mimic certain aspects of the human mind, it is important to remember that they are tools designed to augment human expertise, not replace it. By responsibly and ethically utilizing AI, we can unlock opportunities for personalized medicine and efficient healthcare delivery. Embracing AI technologies in collaboration with healthcare professionals, researchers, and policymakers will lead to significant advancements in diagnosis, treatment, and overall patient care. This harmonious integration of technology and human compassion will shape the future of healthcare, positively impacting the lives of many.

Frequently Asked Questions

1. What is the difference between AI, machine learning, and deep learning?

Artificial intelligence (AI) is a broad field that encompasses various techniques and algorithms for creating machines capable of performing tasks that typically require human intelligence. Machine learning is a subset of AI that focuses on the development of algorithms that enable computers to learn from data. Deep learning is a subset of machine learning that uses artificial neural networks to model complex patterns and representations in data.

2. What are some common AI algorithms and techniques?

Some common AI algorithms and techniques include search and optimization algorithms, such as A* and genetic algorithms; machine learning algorithms, such as linear regression and support vector machines; and deep learning algorithms, such as convolutional neural networks and transformers.

3. What are the main components of an AI system?

An AI system typically consists of hardware components, such as CPUs, GPUs, and AI accelerators, as well as software components, such as AI frameworks, libraries, and operating systems. These components work together to execute AI algorithms and process data.

4. What are some applications of AI in various industries?

AI has a wide range of applications across various industries, including healthcare, where it can be used for diagnostics, treatment planning, and drug discovery; manufacturing, where it can be used for predictive maintenance, quality control, and process optimization; and transportation, where it can be used for autonomous vehicles and traffic management.

5. What are the challenges and future prospects of AI?

Some of the challenges in AI include the need for large amounts of labeled data for training, high computational requirements, and the difficulty of interpreting the internal workings of complex models. Despite these challenges, AI has the potential to transform industries and improve our daily lives, driving advancements in areas such as natural language processing, computer vision, robotics, and edge computing.

Conclusion

In this guide, we have explored the engineering principles and applications of artificial intelligence, covering the fundamental concepts, algorithms, techniques, hardware components, and software tools used in AI systems. By understanding the technical depth of AI, you can appreciate its transformative impact on various industries and its potential to reshape our daily lives. As AI technology continues to advance, it will play an increasingly important role in a wide range of applications, from natural language processing and computer vision to robotics and edge computing. By mastering the engineering aspects of AI, you can contribute to the development of innovative AI solutions that address complex challenges and drive future progress.

Beyond these engineering foundations, AI has made significant strides in transforming industries, including healthcare. The integration of AI technologies, such as generative AI and ChatGPT, has opened new avenues for innovation and problem-solving. AI that matches the complexity of the human brain remains a long-term goal, closer to science fiction than to current engineering practice. Meanwhile, the practical applications of AI in healthcare are already yielding tangible benefits.

Data science and AI-driven algorithms have enabled the development of powerful applications that can analyze vast amounts of healthcare data, leading to more accurate diagnoses, personalized treatment plans, and improved patient outcomes. The distinction between weak AI, which is designed to perform specific tasks, and strong AI, which emulates human-level intelligence, highlights the potential for further advancements in the field.

As AI continues to advance, it is essential to explore and define ethical guidelines to ensure its responsible use. The use cases of AI in healthcare are vast, ranging from medical imaging and drug discovery to virtual assistants and patient monitoring systems. Embracing the possibilities of AI while carefully addressing concerns such as privacy, security, and bias will pave the way for a future where AI complements human expertise and enhances healthcare practices.

In summary, the intersection of AI, data science, and healthcare presents a promising landscape for transformative advancements. By leveraging the power of AI, we can revolutionize the way healthcare is delivered, improve patient care, and ultimately save lives. It is an exciting time for the field, and with continued research and innovation, we can unlock the full potential of AI to benefit individuals and communities worldwide.
