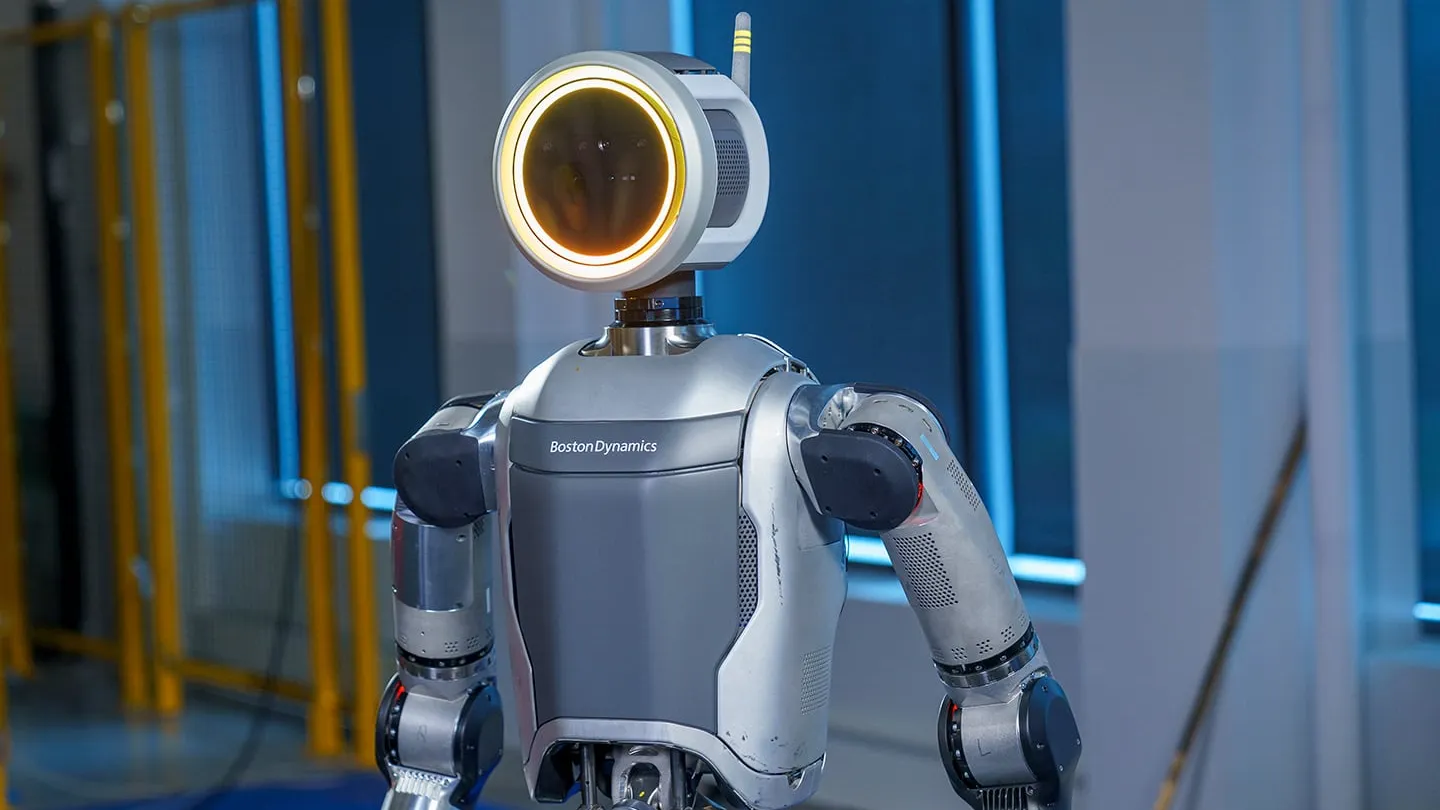

Home robots have faced significant challenges in achieving widespread success beyond the Roomba. Issues such as pricing, practicality, form factor, and mapping have all contributed to numerous failures in the market. Even when these challenges are addressed, there remains a critical question: how should a system respond when it inevitably makes a mistake?

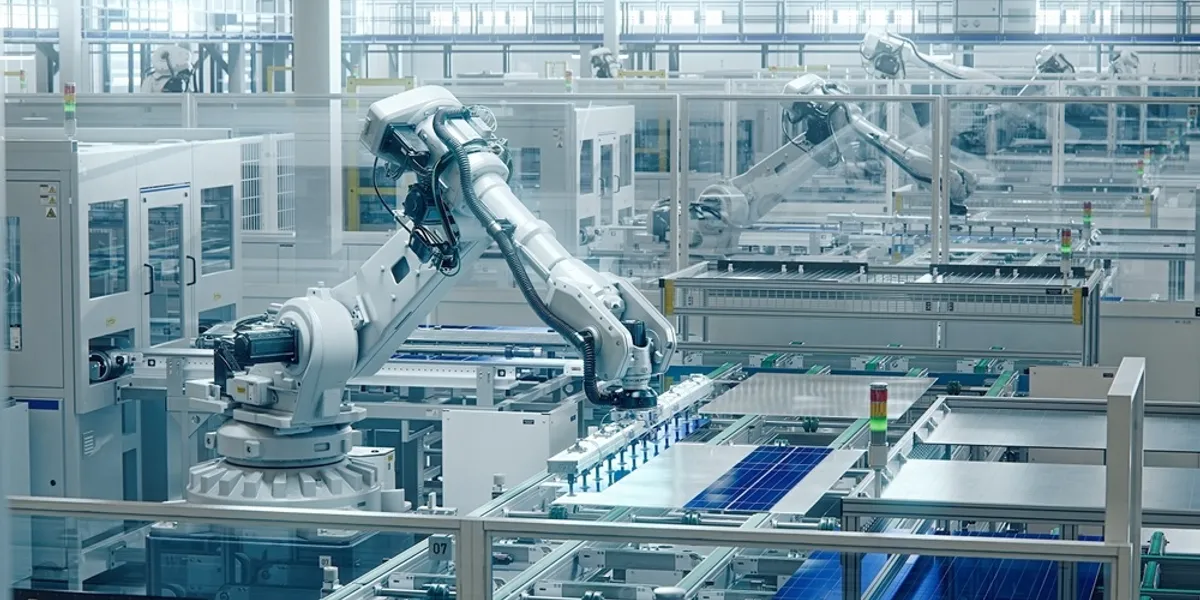

The same problem exists in industrial robotics, but large companies have the resources to address issues as they arise. Consumers, on the other hand, cannot realistically be expected to learn programming or hire someone every time something goes wrong. Fortunately, recent research from MIT demonstrates a promising use case for large language models (LLMs) in robotics.

Current Limitations of Home Robotics

A study scheduled for presentation at the International Conference on Learning Representations (ICLR) in May aims to introduce a bit of “common sense” into the process of correcting robot mistakes.

“Robots are excellent mimics,” MIT explains. “But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle these situations, short of starting their task from the beginning.”

Traditionally, when a robot runs into a problem, it exhausts its pre-programmed options and then requires human intervention. This is a serious limitation in unstructured environments like homes, where even small changes to the surroundings can disrupt a robot’s ability to function.


This Is Where LLMs Step In

The researchers note that while imitation learning (learning a task through observation) is popular in home robotics, it often fails to account for the countless small environmental variations that can disrupt regular operation and force the system to restart. The new research addresses this by breaking each demonstration into smaller subtasks rather than treating it as one continuous action.
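To make the idea concrete, here is a minimal Python sketch of splitting one continuous demonstration into labeled subtasks. Everything in it is an illustrative assumption rather than the researchers’ code: the `State` fields describe a hypothetical, simplified version of the marble-scooping task the team used, and `label_state` is a hand-written rule standing in for whatever state-to-subtask mapping a real system would learn. Consecutive timesteps that receive the same label are merged into one segment.

```python
from itertools import groupby
from typing import NamedTuple


class State(NamedTuple):
    """Hypothetical per-timestep snapshot of the marble-scooping scene."""
    spoon_in_marbles: bool
    marbles_on_spoon: int
    spoon_over_bowl: bool


def label_state(s: State) -> str:
    """Hand-written stand-in for a learned state-to-subtask mapping."""
    if s.spoon_over_bowl:
        return "pour"
    if s.spoon_in_marbles:
        return "scoop"
    if s.marbles_on_spoon > 0:
        return "transport"
    return "reach"


def segment(demo: list[State]) -> list[tuple[str, int, int]]:
    """Group consecutive timesteps with the same label into (subtask, start, end) spans."""
    segments, t = [], 0
    for name, group in groupby(demo, key=label_state):
        n = len(list(group))
        segments.append((name, t, t + n - 1))
        t += n
    return segments


# One continuous demonstration, recorded as seven timesteps.
DEMO = [
    State(False, 0, False), State(False, 0, False),  # approach the marbles
    State(True, 0, False), State(True, 3, False),    # dig in and pick some up
    State(False, 3, False),                          # carry them away
    State(False, 3, True), State(False, 0, True),    # tip them into the bowl
]

print(segment(DEMO))
# [('reach', 0, 1), ('scoop', 2, 3), ('transport', 4, 4), ('pour', 5, 6)]
```

Because each segment has a name, a robot that is knocked off course only needs to work out which segment it is now in, rather than replaying the whole demonstration.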

This is where LLMs come in: they can list and label these subtasks automatically, so a programmer no longer has to label and assign numerous sub-actions by hand.

“LLMs can describe each step of a task in natural language. A human’s continuous demonstration embodies these steps in physical space,” says grad student Tsun-Hsuan Wang. “We wanted to connect the two so that a robot would automatically know what stage it is in a task and be able to replan and recover on its own.”
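Wang’s point about connecting language to physical states can be sketched as follows, under clearly stated assumptions: the step sentences are hard-coded as if an LLM had produced them, and each is paired with a hypothetical hand-written precondition on a simplified `State`. None of these names come from the study. The robot’s stage is the latest step whose precondition currently holds, and replanning simply means continuing from that step.

```python
from typing import Callable, NamedTuple


class State(NamedTuple):
    """Hypothetical snapshot of the marble-scooping scene."""
    spoon_in_marbles: bool
    marbles_on_spoon: int
    spoon_over_bowl: bool


# Steps an LLM might list for the task, each paired with a hand-written
# precondition that grounds the sentence in the robot's physical state.
PLAN: list[tuple[str, Callable[[State], bool]]] = [
    ("move the spoon to the marbles", lambda s: True),
    ("scoop up some marbles", lambda s: s.spoon_in_marbles),
    ("carry the spoon to the bowl",
     lambda s: s.marbles_on_spoon > 0 and not s.spoon_in_marbles),
    ("tip the spoon over the bowl",
     lambda s: s.marbles_on_spoon > 0 and s.spoon_over_bowl),
]


def current_stage(state: State) -> int:
    """Index of the latest step whose precondition holds in this state."""
    for i in range(len(PLAN) - 1, -1, -1):
        if PLAN[i][1](state):
            return i
    return 0  # unreachable while step 0's precondition is always true


def remaining_steps(state: State) -> list[str]:
    """Replan: the steps still to execute, starting from the current stage."""
    return [text for text, _ in PLAN[current_stage(state):]]


# Bumped away from the bowl while still holding marbles:
print(remaining_steps(State(False, 3, False)))
# ['carry the spoon to the bowl', 'tip the spoon over the bowl']
```

Scanning from the last step backward picks the most advanced stage the scene supports; if the marbles are knocked off the spoon, no later precondition holds and the plan falls back to the first step.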


The Method

The study’s demonstration involves training a robot to scoop marbles and pour them into an empty bowl. While this is a simple, repeatable task for a human, for a robot it is a sequence of smaller subtasks, which an LLM can list and label. During the demonstrations, the researchers deliberately disrupted the activity by bumping the robot off course and knocking marbles out of its spoon. Instead of starting over, the system corrected the affected subtask on its own.
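A toy simulation suggests why resuming at the disrupted subtask beats starting over. It is not the researchers’ system: the step names and disturbances are hypothetical and scripted, and `run` is told which stage each disturbance leaves the robot in, whereas a real robot would have to recognize that stage from its sensors. Each disturbance fires once, the first time its step is attempted.

```python
PLAN = ["reach", "scoop", "transport", "pour"]

# Scripted disturbances (hypothetical): the first attempt at the key step is
# interrupted and leaves the robot at the stage given as the value.
DISTURBANCES = {
    "transport": "scoop",  # marbles knocked off the spoon on the way to the bowl
    "pour": "transport",   # arm bumped off course just before pouring
}


def run(disturbances: dict[str, str], resume: bool) -> int:
    """Count step executions until the plan finishes.

    resume=True continues from the stage a disturbance leaves behind;
    resume=False restarts the whole task from the first step.
    """
    pending = dict(disturbances)  # each disturbance fires only once
    stage, executed = 0, 0
    while stage < len(PLAN):
        step = PLAN[stage]
        executed += 1
        if step in pending:
            knocked_back_to = PLAN.index(pending.pop(step))
            stage = knocked_back_to if resume else 0
        else:
            stage += 1
    return executed


print(run(DISTURBANCES, resume=True))   # 8
print(run(DISTURBANCES, resume=False))  # 11
```

With no disturbances both strategies take four step executions. Restarting throws away every step already completed, while resuming only repeats the steps the disturbance actually undid.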

“With our method, when the robot makes mistakes, we don’t need to ask humans to program or provide additional demonstrations on how to recover from failures,” Wang adds.

It’s an innovative approach that could help robots avoid losing their marbles—both literally and figuratively.