Introduction to Autonomous Vehicles

Autonomous vehicles, also known as self-driving cars, have the potential to revolutionize transportation by improving safety, reducing traffic congestion, and increasing accessibility for individuals with disabilities who cannot drive. To fully grasp the potential of this technology, it is essential to understand the engineering aspects that enable these vehicles to navigate complex environments without human intervention.

The Society of Automotive Engineers (SAE) classifies driving automation into six levels, ranging from Level 0 (no automation) to Level 5 (full automation), according to how much of the driving task the vehicle can perform without human control.
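
As a small illustration, the six SAE levels can be written down as an enumeration. This is only a sketch: the names `SaeLevel` and `driver_must_supervise` are hypothetical, and the helper assumes the usual reading that a human must continuously supervise the vehicle at Levels 0 to 2.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE driving automation levels, from no automation to full automation."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SaeLevel) -> bool:
    """Assumption: at Levels 0-2 the human driver monitors the road at all times."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SaeLevel:
        print(level.value, level.name, driver_must_supervise(level))
```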

This article covers the following key components of autonomous vehicles, each of which is critical for achieving this automation:

- Sensors and perception systems,

- Localization and mapping,

- Control systems,

- Communication and networking, and

- Cybersecurity and privacy.

By exploring these topics, you will gain a deeper understanding of the engineering principles that underpin the development and operation of autonomous vehicles.

Autonomous Driving Sensors: Revolutionizing Perception Systems

Sensors play a crucial role in vehicle automation: they collect data from the surrounding environment to enable safe and efficient navigation. Autonomous vehicles use several types of sensors, including LiDAR, cameras, and radar. These sensors work together to provide a comprehensive understanding of the vehicle’s surroundings, detect obstacles, identify traffic lights, and track other vehicles’ movements. Perception systems, in turn, enable the vehicle to recognize and differentiate between pedestrians, vehicles, cyclists, road signs, lane markings, and other critical elements in the environment. By fusing data from multiple sensors and applying perception algorithms, autonomous vehicles can make informed decisions and plan trajectories.

LiDAR

Light Detection and Ranging (LiDAR) is a remote sensing technology that uses laser light to measure distances and create detailed, high-resolution 3D maps of the environment.

In autonomous vehicles, LiDAR sensors emit laser pulses that bounce off the objects and return to the sensor. By measuring the time it takes for the light to travel to the object and back, the sensor can calculate the distance to the object with high accuracy, typically within a few centimeters. This process is repeated thousands of times per second to create a dense point cloud representation of the environment.
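
The time-of-flight calculation described above can be sketched in a few lines. The function names are illustrative, and the sketch assumes the pulse travels at the vacuum speed of light, which is a close approximation in air.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to the target from the pulse's round-trip travel time."""
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(range_resolution_m: float) -> float:
    """Timing precision needed to resolve a given difference in range."""
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    print(f"range: {lidar_range_m(667e-9):.2f} m")
    print(f"timing for 1 cm: {timing_resolution_s(0.01) * 1e12:.1f} ps")
```

A round trip of about 667 nanoseconds corresponds to a target roughly 100 meters away, and resolving a single centimeter requires timing precision on the order of tens of picoseconds.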

LiDAR supports automated driving in several ways: detecting objects and vehicles, creating a local 3D map of the driving environment, avoiding collisions, detecting road geometry, and maintaining perception in challenging situations. Its main advantages for autonomous vehicles are:

- It provides accurate depth information across a 360-degree view of the environment. This depth information is crucial for object detection, localization, and mapping, enabling automated vehicles to understand the geometry and structure of their surroundings.

- LiDAR can detect objects at long ranges, up to 200 meters or more.

- LiDAR is an active sensor that supplies its own illumination, so it is largely unaffected by ambient light and remains effective in darkness and glare, where cameras struggle.

However, LiDAR also has some disadvantages:

- It can be expensive, with high-end sensors costing tens of thousands of dollars.

- LiDAR sensors can be affected by adverse weather conditions, such as heavy rain or snow, which can reduce their performance.

- Its range is generally shorter than that of radar.

- It cannot capture the high-resolution color images that a camera provides at a much lower cost.

In autonomous vehicles, LiDAR is often used in combination with other sensors to create high-resolution maps for navigation. These maps provide detailed information about the vehicle’s surroundings, including the position of other vehicles, pedestrians, and obstacles. By fusing LiDAR data with information from cameras and radar, autonomous vehicles can achieve a more comprehensive understanding of their environment, enabling them to make better decisions and navigate more safely.

Cameras

Cameras are another essential sensor type in autonomous vehicles and offer a low-cost alternative to LiDAR. They capture visual information from the environment, providing valuable data for object detection, recognition, and tracking.

Autonomous vehicles rely on cameras placed on every side (front, rear, left, and right) to stitch together a 360-degree view of their environment. These cameras typically use high-resolution image sensors, often in the range of 8 to 16 megapixels (far below the often-quoted estimate of 576 megapixels for the human eye), to capture detailed images of the surroundings. These images are then processed by computer vision algorithms to identify and classify objects, such as vehicles, pedestrians, traffic signs, and lane markings.

Cameras not only detect objects but also classify them as vehicles, pedestrians, traffic signals, and so on. They also support road and lane detection, recognition of traffic signs and traffic lights, and side-mirror views. In this way, cameras assist both human drivers and automated driving systems.

Tesla, for example, has pursued a camera-only approach to autonomous driving.

The main advantages of cameras in autonomous vehicles are:

- Their ability to capture rich visual information, including color and texture, can be useful for tasks like traffic sign recognition and lane detection.

- Cameras are also relatively inexpensive compared to other sensor types, such as LiDAR, making them a cost-effective solution for many applications.

- By incorporating inexpensive cameras positioned at various angles on the vehicle, cars can achieve a complete 360° view of their external surroundings.

However, cameras also have some limitations:

- They are sensitive to lighting and weather conditions, so their performance can be degraded by rain, fog, snow, glare, shadows, and low light levels.

- Cameras have a limited range compared to LiDAR and radar, typically around 100 meters, which can be insufficient for detecting objects at high speeds or in complex environments.

- A vehicle needs 4 to 6 cameras to cover its full surroundings, and the resulting volume of image data is large enough to require significant processing hardware.
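
To give a feel for the data volume mentioned in the last point, here is a back-of-the-envelope estimate. The frame rate (30 frames per second) and the 3 bytes per pixel for uncompressed color are assumptions chosen for illustration, not figures from a specific vehicle.

```python
def raw_video_rate_mb_per_s(num_cameras: int, megapixels: int,
                            frames_per_second: int, bytes_per_pixel: int) -> float:
    """Uncompressed video data rate in megabytes per second."""
    bytes_per_frame = megapixels * 1_000_000 * bytes_per_pixel
    return num_cameras * bytes_per_frame * frames_per_second / 1_000_000


if __name__ == "__main__":
    # Six 8-megapixel cameras, assumed 30 fps and 3 bytes per pixel.
    rate = raw_video_rate_mb_per_s(6, 8, 30, 3)
    print(f"{rate:.0f} MB/s of raw image data")
```

Under these assumptions the cameras produce about 4,320 MB of raw data every second, which is why compression and dedicated processing hardware are needed.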

In autonomous vehicles, cameras are often used in conjunction with other sensors, such as LiDAR and radar, to provide a more comprehensive understanding of the environment. By combining the visual information from cameras with distance measurements from LiDAR and radar, autonomous vehicles can achieve a higher level of situational awareness, enabling them to make better decisions and navigate more safely.

Radar

Radar (Radio Detection and Ranging) is another essential sensor technology used in autonomous vehicles.

Radar operates by emitting radio waves (compared to LiDAR’s light waves) that bounce off objects and return to the sensor, allowing the system to measure the distance, speed, and direction of objects in the vehicle’s surroundings. Radar sensors can detect objects at long ranges, typically up to 250 meters, and are less affected by adverse weather conditions, such as rain, fog, or snow, than LiDAR and cameras. Radar is the same technology used in police speed-enforcement devices to catch vehicles exceeding the speed limit.

In driver assistance systems, radar contributes to applications such as object detection, distance measurement, adaptive cruise control (ACC), collision avoidance, and blind spot detection.
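
Radar measures speed through the Doppler effect: the reflected wave is shifted in frequency in proportion to the target's radial speed. The sketch below shows that relationship. The 77 GHz carrier is an assumption (a band commonly used by automotive radar), and the function names are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def doppler_shift_hz(radial_speed_m_per_s: float, carrier_hz: float = 77e9) -> float:
    """Frequency shift of the echo from a target closing at the given speed."""
    # The factor 2 appears because the wave travels to the target and back.
    return 2.0 * radial_speed_m_per_s * carrier_hz / SPEED_OF_LIGHT_M_PER_S


def radial_speed_m_per_s(shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Invert the relationship: recover the target's radial speed from the shift."""
    return shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    shift = doppler_shift_hz(30.0)  # target closing at 30 m/s (108 km/h)
    print(f"Doppler shift: {shift:.0f} Hz")
    print(f"Recovered speed: {radial_speed_m_per_s(shift):.1f} m/s")
```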

Radar offers several advantages in autonomous vehicles, the main ones being:

- It can detect objects at long ranges which helps vehicles to maintain a safe distance while driving.

- It is less sensitive to environmental conditions, making it a reliable sensor in various situations.

- Radar sensors are generally more affordable than LiDAR sensors, making them a cost-effective option for vehicle manufacturers.

However, radar also has some limitations:

- It provides lower-resolution data than LiDAR, making it less suitable for detailed mapping and object recognition tasks.

- Radar sensors can be affected by interference from other radar systems or electronic devices, which may reduce their performance.

- While radar can determine speed and distance, it cannot reliably distinguish between different types of vehicles.

In autonomous vehicles, radar sensors are often used in combination with other sensors, such as LiDAR and cameras, to provide a comprehensive understanding of the vehicle’s environment. Radar is particularly useful for detecting large, metallic objects, such as other vehicles, and measuring their speed and distance. By fusing radar data with information from other sensors, autonomous vehicles can achieve a more accurate and reliable perception of their surroundings, enabling them to make better decisions and navigate more safely.
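
One simple way to picture sensor fusion is an inverse-variance weighted average, in which more precise sensors get more weight. This is a minimal sketch, not the method of any particular vehicle; the measurement values and variances below are invented for illustration.

```python
def fuse_estimates(measurements):
    """Fuse (value, variance) pairs into one estimate by inverse-variance weighting.

    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


if __name__ == "__main__":
    lidar = (50.2, 0.01)   # hypothetical: range in meters, variance in m^2
    radar = (50.8, 0.25)   # hypothetical: noisier range measurement
    value, variance = fuse_estimates([lidar, radar])
    print(f"fused range: {value:.2f} m, variance: {variance:.4f} m^2")
```

The fused range lands close to the more precise LiDAR reading, and the fused variance is slightly lower than that of either sensor alone.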

Further Reading: Lidar vs. Radar: Comprehensive Comparison and Analysis

Localization and Mapping Automation: Empowering Efficiency

Once the sensors, such as LiDAR, cameras, and radar, gather data about the environment, the autonomous vehicle uses this information to create a map of its surroundings. Simultaneously, it determines its own position within that map, a process known as localization.

By combining sensor data with advanced algorithms, vehicle technology can accurately understand its location and surroundings, enabling it to navigate autonomously.

Localization and mapping are critical components of autonomous vehicles, as they enable the vehicle to understand its position within the environment and plan its route accordingly. Localization is performed using technologies such as GPS and inertial measurement units (IMUs).

Mapping involves creating a detailed representation of the environment, including roads, buildings, and other features, which the automated vehicles use to navigate safely and efficiently. Simultaneous Localization and Mapping (SLAM) is a technique that combines these two processes, allowing the vehicle to build a map of its surroundings while simultaneously determining its position within that map.

GPS and GNSS

For a fully autonomous vehicle to operate effectively, it requires a localization solution that is both accurate and reliable. GNSS (Global Navigation Satellite System) technology, when augmented with correction services, can provide accuracy down to the decimeter level, which is needed to keep the vehicle within its designated lane and at a safe distance from other vehicles.

Global Positioning System (GPS) and Global Navigation Satellite System (GNSS) are satellite-based navigation systems that provide precise positioning information for autonomous cars. GPS, developed by the United States, is the most well-known GNSS, while other GNSS systems include Russia’s GLONASS, Europe’s Galileo, and China’s BeiDou. These systems use a network of satellites orbiting the Earth to transmit signals that allow receivers on the ground to calculate their position with high accuracy, typically within a few meters.

The working principle of GPS and GNSS in autonomous vehicles involves receiving signals from multiple satellites and using the time it takes for each signal to travel from the satellite to the receiver to calculate the distance to that satellite. By knowing the positions of the satellites and the distances to them, the receiver can determine its own location using a process called trilateration. GPS and GNSS provide continuous positioning information, allowing the vehicle to track its movement and update its position in real time. This positioning information is used primarily for vehicle navigation and route planning.
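
The idea behind trilateration can be sketched in two dimensions with three reference points. This is a simplification: a real GNSS receiver works in three dimensions, needs at least four satellites, and also solves for its own clock error. The function name and the coordinates are illustrative.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Find (x, y) from three known anchor positions and the distances to them.

    Subtracting the first circle equation from the other two removes the
    squared unknowns and leaves a 2x2 linear system, solved here by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a11, a12 = 2.0 * (x2 - x1), 2.0 * (y2 - y1)
    a21, a22 = 2.0 * (x3 - x1), 2.0 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; the position is ambiguous")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    true_position = (3.0, 4.0)
    ranges = [math.dist(true_position, anchor) for anchor in anchors]
    print(trilaterate_2d(anchors, ranges))
```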

GPS and GNSS offer several advantages for autonomous vehicles:

- They provide global coverage, enabling vehicles to determine their position anywhere on Earth.

- They offer high accuracy, with positioning errors typically in the range of 1 to 5 meters, depending on the system and environmental conditions.

- GNSS systems provide continuous updates on the vehicle’s position, velocity, and time. This information is essential for autonomous cars to make informed decisions and adjust their behavior accordingly.

However, GPS and GNSS also have some limitations:

- Their performance can be affected by factors such as signal blockage or multipath interference, which can occur in urban environments with tall buildings or in areas with dense foliage.

- GPS and GNSS signals can be disrupted by atmospheric conditions or interference from other electronic devices.

- GNSS relies on a network of satellites and ground-based infrastructure, making it dependent on the availability and reliability of these systems. Any disruptions or outages can impact the vehicle’s ability to navigate accurately.

In autonomous vehicles, GPS and GNSS are often used in combination with other localization technologies, such as IMUs and LiDAR, to provide more accurate and reliable positioning information. By fusing data from multiple sources, the vehicle can achieve a higher level of localization accuracy, enabling it to navigate more safely and efficiently.

Inertial Measurement Units (IMUs)

Inertial Measurement Units (IMUs) are critical components in autonomous vehicles, providing information about the vehicle’s motion, orientation, and acceleration. IMUs consist of accelerometers, gyroscopes, and sometimes magnetometers, which measure linear acceleration, angular velocity, and magnetic field strength, respectively. IMUs are especially useful in situations where GNSS signals may be obstructed or unavailable, such as in tunnels, urban canyons, or dense foliage, because they can provide continuous motion tracking even when GNSS signals are temporarily lost.

Accelerometers measure linear acceleration along three axes (x, y, and z), while gyroscopes measure angular velocity around these axes. By integrating the data from these sensors, an IMU can estimate the vehicle’s position, velocity, and orientation over time. Magnetometers, when included, can provide additional information about the vehicle’s orientation by measuring the Earth’s magnetic field.
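
The integration step can be illustrated with a simplified planar model that uses only forward acceleration and yaw rate. This is a sketch under those assumptions; a real IMU pipeline works in three dimensions and must also compensate for gravity and sensor bias. The function name and state layout are hypothetical.

```python
import math


def integrate_imu(state, forward_accel, yaw_rate, dt):
    """Advance a planar (x, y, heading, speed) state by one IMU sample.

    forward_accel is in m/s^2, yaw_rate in rad/s, dt in seconds.
    """
    x, y, heading, speed = state
    speed += forward_accel * dt          # accelerometer -> speed
    heading += yaw_rate * dt             # gyroscope -> heading
    x += speed * math.cos(heading) * dt  # speed and heading -> position
    y += speed * math.sin(heading) * dt
    return (x, y, heading, speed)


if __name__ == "__main__":
    state = (0.0, 0.0, 0.0, 10.0)  # start at the origin, heading along x at 10 m/s
    for _ in range(100):           # 100 samples at 100 Hz = 1 second
        state = integrate_imu(state, forward_accel=0.0, yaw_rate=0.1, dt=0.01)
    print(state)
```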

Imagine an autonomous vehicle driving on a curvy mountain road. The IMU here continuously measures the vehicle’s linear acceleration, detecting any changes in speed or direction. This data helps the automated vehicle’s control system adjust the steering, braking, and acceleration to maintain stability and ensure a smooth ride.

Additionally, the IMU’s gyroscopes measure the vehicle’s angular velocity, providing information about its rotational movement. This helps the autonomous vehicle’s control system make precise steering adjustments, especially when navigating sharp turns or avoiding obstacles.

IMUs offer several advantages in autonomous vehicles:

- They provide high-frequency data, typically in the range of 100 to 1000 Hz, allowing for precise motion tracking and control.

- IMUs are not affected by environmental factors, such as lighting conditions or weather, making them a reliable source of information in various situations.

However, IMUs also have some limitations:

- They are prone to drift and accumulated errors over time, which can lead to inaccuracies in position and orientation estimates.

- IMUs primarily measure acceleration and angular rates, but they do not directly provide information about the vehicle’s surroundings or the presence of obstacles.

To mitigate these errors, IMUs are often used in combination with other localization technologies, such as GPS or GNSS. In autonomous vehicles, IMUs contribute to localization and mapping by providing continuous information about the vehicle’s motion and orientation. This data is essential for tasks such as dead reckoning, where the vehicle estimates its position based on its previous position and motion data. By fusing IMU data with information from other sensors, such as GPS, LiDAR, and cameras, driverless cars can achieve more accurate and robust localization and mapping, enabling them to navigate complex environments more effectively.
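
The effect of combining dead reckoning with occasional GNSS fixes can be seen in a small one-dimensional simulation. It uses a fixed-gain blend, a much simpler stand-in for the Kalman filters commonly used for this task. All numbers (speed bias, gain, update rates) are assumptions for illustration, and the GNSS fix is treated as noise-free.

```python
def blend(predicted_m: float, gnss_m: float, gain: float = 0.2) -> float:
    """Pull the dead-reckoned position a fraction of the way toward the GNSS fix."""
    return predicted_m + gain * (gnss_m - predicted_m)


def final_error_m(steps=100, dt=0.1, true_speed=10.0, speed_bias=0.5, use_gnss=True):
    """Position error after dead reckoning with a biased speed estimate."""
    true_position = 0.0
    estimate = 0.0
    for step in range(1, steps + 1):
        true_position += true_speed * dt
        estimate += (true_speed + speed_bias) * dt  # biased motion data drifts
        if use_gnss and step % 10 == 0:             # assumed 1 Hz GNSS fix
            estimate = blend(estimate, true_position)
    return abs(estimate - true_position)


if __name__ == "__main__":
    print(f"IMU only:   {final_error_m(use_gnss=False):.2f} m")
    print(f"IMU + GNSS: {final_error_m(use_gnss=True):.2f} m")
```

Without corrections the error grows steadily with time, while the periodic GNSS updates keep it bounded.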

Simultaneous Localization and Mapping (SLAM)

Simultaneous Localization and Mapping (SLAM) is a technique used in self-driving vehicles to build a map of the environment while simultaneously determining the vehicle’s position within that map. SLAM is particularly useful in situations where GPS or GNSS signals are unavailable or unreliable, such as in urban canyons, tunnels, or indoor environments. SLAM algorithms combine data from various sensors, such as LiDAR, cameras, and IMUs, to create a detailed representation of the environment and estimate the vehicle’s position and orientation.

The working principle of SLAM involves two main steps:

- Feature extraction: In the feature extraction step, the SLAM algorithm processes sensor data to identify distinctive features in the environment, such as corners, edges, or landmarks. These features are then used to build a map of the environment.

- Data association: In the data association step, the algorithm matches the observed features with those in the existing map to determine the vehicle’s position and orientation relative to the map.
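
The data association step can be sketched as a nearest-neighbor search with a distance gate. Production SLAM systems use more robust matching, so treat this as a minimal illustration; the function name, the 1-meter gate, and the coordinates are hypothetical.

```python
import math


def associate(observations, landmarks, max_distance=1.0):
    """Match each observed feature to the closest known landmark.

    Returns (observation_index, landmark_index) pairs. The landmark index is
    None when nothing lies within max_distance, which marks the observation
    as a candidate new landmark for the map.
    """
    matches = []
    for obs_index, observation in enumerate(observations):
        best_index, best_distance = None, max_distance
        for landmark_index, landmark in enumerate(landmarks):
            distance = math.dist(observation, landmark)
            if distance <= best_distance:
                best_index, best_distance = landmark_index, distance
        matches.append((obs_index, best_index))
    return matches


if __name__ == "__main__":
    known_landmarks = [(0.0, 0.0), (5.0, 5.0)]
    observed = [(0.2, -0.1), (4.9, 5.3), (20.0, 20.0)]
    print(associate(observed, known_landmarks))
```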

SLAM offers several advantages for autonomous vehicles:

- It enables vehicles to operate in environments where GPS or GNSS signals are not available or reliable, providing a robust solution for localization and mapping.

- SLAM algorithms can adapt to changes in the environment, updating the map as new features are observed or as existing features change. This adaptability is particularly useful in dynamic environments, such as urban settings, where the vehicle must navigate around moving objects and deal with changing traffic conditions.

However, SLAM also has some limitations:

- It can be computationally intensive, requiring significant processing power to handle large amounts of sensor data and perform complex calculations.

- SLAM algorithms can be sensitive to errors in sensor measurements or feature extraction, which can lead to inaccuracies in the map or the vehicle’s estimated position.

In autonomous vehicles, SLAM is often used in combination with other localization and mapping technologies, such as GPS and GNSS, to provide a more accurate and reliable solution for navigation. By fusing data from multiple sources, the vehicle can achieve a higher level of localization accuracy and build a more detailed and up-to-date map of its environment, enabling it to navigate more safely and efficiently.

Further Reading: Lidar SLAM: The Ultimate Guide to Simultaneous Localization and Mapping

Control Systems

Control systems are a vital component of self-driving vehicles, responsible for managing the vehicle’s motion and ensuring safe and efficient navigation. They take the information produced by the localization and mapping processes and use it to make decisions and control the vehicle’s movements. By analyzing the environment, detecting obstacles, and considering the desired trajectory, the control system guides the vehicle in real time, adjusting its speed, acceleration, and steering to follow the road and reach its destination. These systems take input from various sensors and use algorithms to determine the appropriate actions, such as accelerating, braking, or steering.

There are two primary types of control systems in autonomous vehicles: longitudinal control, which manages the vehicle’s speed and acceleration, and lateral control, which manages the vehicle’s steering and lane-keeping.

Longitudinal Control

Longitudinal control in automated driving systems focuses on managing the vehicle’s speed and acceleration to maintain a safe and comfortable driving experience without requiring intervention from a human driver. This control system takes input from various sensors, such as LiDAR, radar, and cameras, to detect the vehicle’s surroundings and determine the appropriate speed and acceleration based on factors such as traffic conditions, road geometry, and speed limits.

One of the key aspects of longitudinal control is adaptive cruise control (ACC), which automatically adjusts the vehicle’s speed to maintain a safe following distance from the vehicle ahead. ACC systems use sensors, such as radar or LiDAR, to measure the distance and relative speed of the motor vehicle in front, and then adjust the vehicle’s acceleration or deceleration to maintain a predefined gap. Some advanced ACC systems can even bring the vehicle to a complete stop and resume driving when the traffic starts moving again.
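
A gap-keeping controller of this kind can be sketched with a constant time-gap policy: the desired gap grows with speed, and the commanded acceleration responds to both the gap error and the relative speed. The gains, the 1.8-second time gap, and the acceleration limits below are illustrative assumptions, not values from a production system.

```python
def acc_acceleration(gap_m, ego_speed, lead_speed,
                     time_gap_s=1.8, standstill_gap_m=5.0,
                     gap_gain=0.2, speed_gain=0.6,
                     max_accel=2.0, max_brake=-3.5):
    """Commanded acceleration (m/s^2) for a simple constant time-gap policy.

    Speeds are in m/s. Positive output accelerates, negative output brakes.
    """
    desired_gap = standstill_gap_m + time_gap_s * ego_speed
    gap_error = gap_m - desired_gap        # positive: more room than needed
    relative_speed = lead_speed - ego_speed  # negative: closing in on the lead car
    command = gap_gain * gap_error + speed_gain * relative_speed
    return max(max_brake, min(max_accel, command))


if __name__ == "__main__":
    print(acc_acceleration(gap_m=50.0, ego_speed=25.0, lead_speed=25.0))  # steady following
    print(acc_acceleration(gap_m=30.0, ego_speed=25.0, lead_speed=20.0))  # too close, closing
    print(acc_acceleration(gap_m=80.0, ego_speed=20.0, lead_speed=25.0))  # plenty of room
```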

Another important aspect of longitudinal control is collision avoidance, where the control system detects potential obstacles and takes evasive actions, such as braking or changing lanes, to avoid a collision. This functionality relies on advanced algorithms that process sensor data to predict the future positions of surrounding objects and determine the safest course of action.
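
A common building block for this kind of decision is time-to-collision (TTC): the gap divided by the closing speed. The sketch below assumes constant speeds over the prediction horizon, and the 2-second braking threshold is an illustrative assumption.

```python
import math


def time_to_collision_s(gap_m: float, ego_speed: float, lead_speed: float) -> float:
    """Seconds until the gap closes if both speeds (m/s) stay constant."""
    closing_speed = ego_speed - lead_speed
    if closing_speed <= 0.0:
        return math.inf  # not closing in, so no collision is predicted
    return gap_m / closing_speed


def should_brake(gap_m, ego_speed, lead_speed, threshold_s=2.0) -> bool:
    """Request braking when the predicted time to collision is below the threshold."""
    return time_to_collision_s(gap_m, ego_speed, lead_speed) < threshold_s


if __name__ == "__main__":
    print(time_to_collision_s(40.0, 25.0, 5.0))  # gap of 40 m closing at 20 m/s
    print(should_brake(30.0, 25.0, 5.0))
```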

In automated driving systems, longitudinal control contributes to vehicle safety and performance by ensuring that the vehicle maintains a safe speed and following distance, while also reacting to potential hazards in the environment. By combining data from multiple sensors and using advanced control algorithms, longitudinal control systems can provide a smooth and comfortable driving experience by automating the driving task, while also reducing the risk of accidents.

Lateral Control

Lateral control in autonomous vehicles focuses on managing the vehicle’s steering and lane-keeping to ensure safe and accurate navigation. As autonomous vehicles continue to advance, the traditional steering wheel, once an essential component of driving, may eventually give way to control systems that enable fully automated navigation.

This control system takes input from various sensors, such as cameras, LiDAR, and GPS, to detect the vehicle’s position within the lane, the curvature of the road, and the presence of other vehicles or obstacles. Based on this information, the lateral control system adjusts the vehicle’s steering angle to maintain the desired trajectory and stay within the lane boundaries.

One of the key aspects of lateral control is lane-keeping assistance (LKA), which, similar to an autopilot system, automatically adjusts the vehicle’s steering to help the driver stay within the lane markings. LKA systems use cameras or other sensors to detect the lane boundaries and determine the vehicle’s position relative to them. If the vehicle starts to drift out of its lane, the LKA system gently adjusts the steering to guide the vehicle back to the center of the lane.
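
One well-known way to turn lane position into a steering command is a control law in the style of the Stanley controller, which combines the heading error with a term that shrinks the lateral offset. This is a sketch of that idea, not the method of any specific LKA product. The sign convention (positive values mean "steer left"), the gain, and the steering limit are assumptions.

```python
import math


def lane_keeping_steer(heading_error_rad, lateral_offset_m, speed_m_per_s,
                       gain=1.0, max_steer_rad=math.radians(30.0)):
    """Steering angle that aligns the vehicle with the lane and reduces its offset.

    heading_error_rad: lane direction minus vehicle heading.
    lateral_offset_m: signed distance from the vehicle to the lane center.
    """
    # The small constant keeps the correction well defined at standstill.
    correction = math.atan2(gain * lateral_offset_m, speed_m_per_s + 1e-3)
    steer = heading_error_rad + correction
    return max(-max_steer_rad, min(max_steer_rad, steer))


if __name__ == "__main__":
    print(lane_keeping_steer(0.0, 0.5, 10.0))   # small drift at speed: gentle correction
    print(lane_keeping_steer(0.0, 0.5, 2.0))    # same drift at low speed: sharper correction
```

Dividing the offset by the speed makes corrections gentler at highway speeds, which matches the "gently adjusts the steering" behavior described above.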

Another important aspect of lateral control is lane change assistance, where the control system detects the presence of other vehicles or obstacles in adjacent lanes and assists the driver in making safe lane changes. This functionality relies on sensors, such as cameras, LiDAR, or radar, to monitor the vehicle’s surroundings and determine the appropriate timing and steering adjustments for a safe lane change.

In autonomous vehicles, lateral control contributes to vehicle safety and performance by ensuring that the vehicle stays within its lane and navigates curves accurately, while also assisting the driver in making safe lane changes. By combining data from multiple sensors and using advanced control algorithms, lateral control systems can provide a smooth and comfortable driving experience, while also reducing the risk of vehicle crashes caused by human errors or other factors.

Further Reading: Predictive Model of Adaptive Cruise Control Speed to Enhance Engine Operating Conditions

Communication and Networking Automation: Connecting the Road Ahead

Communication and networking play a crucial role in autonomous vehicles, enabling them to exchange information with other vehicles, infrastructure, and devices to enhance safety and performance. By sharing data about their position, speed, and intentions, vehicles can coordinate their actions to avoid collisions, optimize traffic flow, and improve overall efficiency. There are several communication technologies used in autonomous vehicles, such as Vehicle-to-Everything (V2X) communication and 5G networks, which provide the necessary connectivity for these advanced features.

Vehicle-to-Everything (V2X) Communication

Vehicle-to-Everything (V2X) communication is a technology that allows autonomous vehicles to exchange information with other vehicles (V2V), infrastructure (V2I), pedestrians (V2P), and networks (V2N). V2X communication enables a wide range of applications, such as cooperative collision avoidance, traffic signal optimization, and real-time traffic information sharing, which can enhance safety, efficiency, and convenience for drivers and passengers.

For example, this information enhances the driver’s awareness of road conditions, roadworks notices, nearby accidents, approaching emergency vehicles, and the activities of other drivers on the same route. It also alerts drivers to potential dangers and helps reduce the likelihood of road accidents and injuries. The technology also improves traffic efficiency by informing drivers about upcoming traffic jams and suggesting alternative routes to their destination.

V2X communication typically uses Dedicated Short-Range Communications (DSRC) or Cellular Vehicle-to-Everything (C-V2X) technology to transmit data between vehicles and other entities.

DSRC is a wireless communication standard that operates in the 5.9 GHz frequency band, providing low-latency, high-reliability communication with a range of up to 1 km.

C-V2X, on the other hand, leverages cellular networks, such as 4G LTE or 5G, to provide longer-range, higher-capacity communication.
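
To make the idea of exchanging position, speed, and intent concrete, here is a toy status message with encode and decode helpers. The field names and the JSON encoding are purely hypothetical and chosen for readability; they are not the message format of DSRC, C-V2X, or any V2X standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class VehicleStatusMessage:
    """Hypothetical status broadcast; real V2X messages use standardized formats."""
    vehicle_id: str
    latitude_deg: float
    longitude_deg: float
    speed_m_per_s: float
    heading_deg: float
    braking: bool


def encode(message: VehicleStatusMessage) -> bytes:
    """Serialize the message for transmission."""
    return json.dumps(asdict(message), sort_keys=True).encode("utf-8")


def decode(payload: bytes) -> VehicleStatusMessage:
    """Rebuild the message on the receiving side."""
    return VehicleStatusMessage(**json.loads(payload.decode("utf-8")))


if __name__ == "__main__":
    sent = VehicleStatusMessage("veh-001", 48.1374, 11.5755, 13.9, 92.0, True)
    received = decode(encode(sent))
    print(received)
```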

The main advantages of V2X communication are:

- It gives vehicles a more comprehensive understanding of their environment, beyond the line-of-sight limitations of onboard sensors.

- By sharing data with other vehicles and infrastructure, V2X-equipped vehicles can anticipate potential hazards, such as vehicles approaching from blind spots or pedestrians crossing the street, and take appropriate actions to avoid collisions.

- V2X communication can enable vehicles to coordinate their movements, such as forming platoons to reduce fuel consumption or optimizing traffic signal timings to minimize congestion.

However, V2X communication also faces some challenges:

- Because it relies on network connectivity, it is exposed to hacking and other cyberattacks.

- It requires widespread deployment of compatible infrastructure and devices, and it raises concerns about cybersecurity and privacy.

Despite these challenges, V2X communication is expected to play a critical role in the future of autonomous vehicles, enabling them to operate more safely and efficiently in complex, dynamic environments.

5G and Beyond

5G, the fifth generation of mobile networks, has the potential to significantly impact autonomous vehicles by providing faster and more reliable communication capabilities. With its low latency, high data rates, and increased capacity, 5G can enable real-time communication between vehicles, infrastructure, and other devices, enhancing the overall performance and safety of autonomous vehicles.

The working principle of 5G involves using a combination of higher frequency bands, advanced antenna technologies, and network slicing to provide faster and more reliable communication. 5G networks can achieve data rates up to 10 Gbps, which is 100 times faster than 4G, and latency as low as 1 millisecond, enabling near-instantaneous communication between devices. This low latency is particularly important for autonomous vehicles, as it allows them to react more quickly to changes in their environment and make better decisions.
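
The practical meaning of latency becomes clearer when it is converted into distance travelled before a message arrives. In the sketch below, the 1-millisecond figure comes from the text above, while the 50-millisecond comparison value and the vehicle speed are assumptions for illustration.

```python
def distance_travelled_m(speed_km_per_h: float, latency_ms: float) -> float:
    """Distance a vehicle covers while a message is still in transit."""
    speed_m_per_s = speed_km_per_h / 3.6
    return speed_m_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    for latency_ms in (1.0, 50.0):  # 50 ms is an assumed comparison value
        metres = distance_travelled_m(108.0, latency_ms)
        print(f"{latency_ms:>4.0f} ms at 108 km/h -> {metres:.2f} m")
```

At 108 km/h, a vehicle moves about 3 centimeters during 1 millisecond of latency, compared with 1.5 meters during 50 milliseconds.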

The advantages of 5G in autonomous vehicles include:

- Improved vehicle-to-everything (V2X) communication, enhanced sensor data processing, and support for advanced applications, such as remote driving and platooning.

- With 5G, vehicles can share more data with each other and with infrastructure, enabling them to coordinate their movements more effectively and avoid potential hazards.

- 5G can support the processing of large amounts of sensor data in real time, allowing vehicles to build more accurate and up-to-date maps of their environment.

However, there are also some challenges associated with the implementation of 5G in autonomous vehicles:

- Deploying 5G infrastructure, such as small cells and antennas, can be expensive and time-consuming, particularly in urban areas where coverage is most needed.

- 5G networks may be vulnerable to interference or congestion, which could impact the performance and reliability of communication between vehicles and other devices.

As the development of 5G and future communication technologies continues, it is expected that these advancements will play a crucial role in the evolution of autonomous vehicles. By providing faster and more reliable communication capabilities, 5G and beyond can enable vehicles to share more data, make better decisions, and ultimately, navigate more safely and efficiently.

Further Reading: Designing C-V2X Communication Systems: Key Engineering Considerations and Best Practices

Securing Autonomous Vehicles: Cybersecurity and Privacy

Cybersecurity and privacy are critical concerns in the development and deployment of autonomous vehicles. As these vehicles rely on complex networks of sensors, communication systems, and control algorithms, they can be vulnerable to various cybersecurity threats, such as hacking, data breaches, and denial-of-service attacks. Ensuring the security and privacy of autonomous vehicles is essential to protect the safety and trust of users, as well as to comply with regulations and industry standards.

One of the primary cybersecurity threats facing autonomous vehicles is the potential for unauthorized access to the vehicle’s control systems. Hackers could exploit vulnerabilities in the vehicle’s software or communication protocols to gain control over critical functions, such as steering, acceleration, or braking. This could lead to dangerous situations, such as collisions or loss of control. To mitigate this risk, vehicle manufacturers and suppliers must implement robust security measures, such as secure software development practices, encryption, and intrusion detection systems, to protect the vehicle’s control systems from unauthorized access.
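
One basic protection against forged commands is message authentication, where the receiver rejects any command whose tag does not match. The sketch below uses an HMAC from Python's standard library with a shared secret key. The key and command strings are hypothetical, and production vehicle systems typically rely on certificate-based digital signatures and hardware-protected keys rather than a key embedded in code.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an authentication tag for the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Accept the message only if the tag matches (constant-time comparison)."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    secret_key = b"hypothetical-shared-key"  # illustration only
    command = b"brake:0.4"
    tag = sign(secret_key, command)
    print(verify(secret_key, command, tag))            # genuine command
    print(verify(secret_key, b"accelerate:1.0", tag))  # tampered command
```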

Another cybersecurity concern is the potential for data breaches, as autonomous vehicles generate and transmit large amounts of data, including personal information about the vehicle’s occupants and their travel habits. Ensuring the privacy of this data is essential to comply with data protection regulations, such as the General Data Protection Regulation (GDPR) in the European Union, and to maintain the trust of users. To protect the privacy of user data, vehicle manufacturers and service providers must implement strong data encryption, access controls, and data minimization techniques, as well as provide transparent information about how the data is collected, used, and shared.
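
Data minimization can be as simple as dropping fields that are not needed and reducing the precision of the rest before a record leaves the vehicle. The sketch below is one hypothetical approach: the record layout, field names, and salt are invented for illustration. Note that salted hashing is pseudonymization rather than full anonymization, since records from the same vehicle remain linkable.

```python
import hashlib


def pseudonymize_id(vehicle_id: str, salt: bytes) -> str:
    """Replace the real identifier with a salted hash (a pseudonym)."""
    return hashlib.sha256(salt + vehicle_id.encode("utf-8")).hexdigest()[:16]


def coarsen_coordinate(value_deg: float, decimals: int = 2) -> float:
    """Round a coordinate; two decimals of latitude is roughly 1 km."""
    return round(value_deg, decimals)


def minimize_trip_record(record: dict, salt: bytes) -> dict:
    """Keep only what is needed, at reduced precision."""
    return {
        "vehicle": pseudonymize_id(record["vehicle_id"], salt),
        "lat": coarsen_coordinate(record["lat"]),
        "lon": coarsen_coordinate(record["lon"]),
    }


if __name__ == "__main__":
    raw = {"vehicle_id": "VIN-123", "driver_name": "A. Driver",
           "lat": 48.13743, "lon": 11.57549}
    print(minimize_trip_record(raw, salt=b"hypothetical-salt"))
```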

In addition to cybersecurity threats, autonomous vehicles also face challenges related to the privacy of their users. For example, vehicles equipped with cameras or other sensors may inadvertently capture images or data about individuals who are not occupants of the vehicle, raising concerns about surveillance and privacy. To address these concerns, vehicle manufacturers and service providers must develop privacy-preserving technologies, such as anonymization, data masking, and differential privacy, to protect the privacy of individuals who may be affected by the vehicle’s data collection activities.

In conclusion, ensuring the cybersecurity and privacy of autonomous vehicles is a critical aspect of their development and deployment. By implementing robust security measures and privacy-preserving technologies, vehicle manufacturers and service providers can protect the safety and trust of users, comply with regulations, and contribute to the successful adoption of autonomous vehicles in society.

Further Reading: Security analysis of camera-LiDAR perception for autonomous vehicle system

Conclusion

Understanding the engineering aspects of autonomous vehicles is essential for their successful implementation and adoption. This article has explored the critical components of autonomous vehicles, including sensors and perception systems, localization and mapping, control systems, communication and networking, and cybersecurity and privacy. By delving into these topics, we have gained a deeper understanding of the engineering principles that underpin the development and operation of autonomous vehicles. As technology continues to advance, it is crucial to address the challenges and opportunities presented by these engineering aspects to ensure the safe, efficient, and widespread deployment of autonomous vehicles in our society.

Frequently Asked Questions

- What is ADAS?

ADAS (Advanced Driver Assistance Systems) refers to a set of technologies and features designed to assist drivers in the operation and control of a vehicle. ADAS utilizes various sensors, cameras, radar, and other detection devices to monitor the vehicle’s surroundings, detect potential hazards, and provide real-time feedback to the driver or even intervene in certain situations.

- How do autonomous vehicles determine their position and create maps of their environment?

Autonomous vehicles use technologies such as GPS, GNSS, IMUs, and SLAM to determine their position and create maps of their environment. These technologies enable the vehicle to understand its location within the environment and plan its route accordingly.

- What is the difference between an autonomous car and a driverless car?

A driverless car specifically implies a vehicle that operates entirely without a human driver and does not require human intervention at any level. An autonomous car, by contrast, refers to a vehicle that uses artificial intelligence (AI) and advanced technologies to operate without direct human input in certain driving tasks. Autonomous cars can have different levels of automation, ranging from Level 1 to Level 5.

-

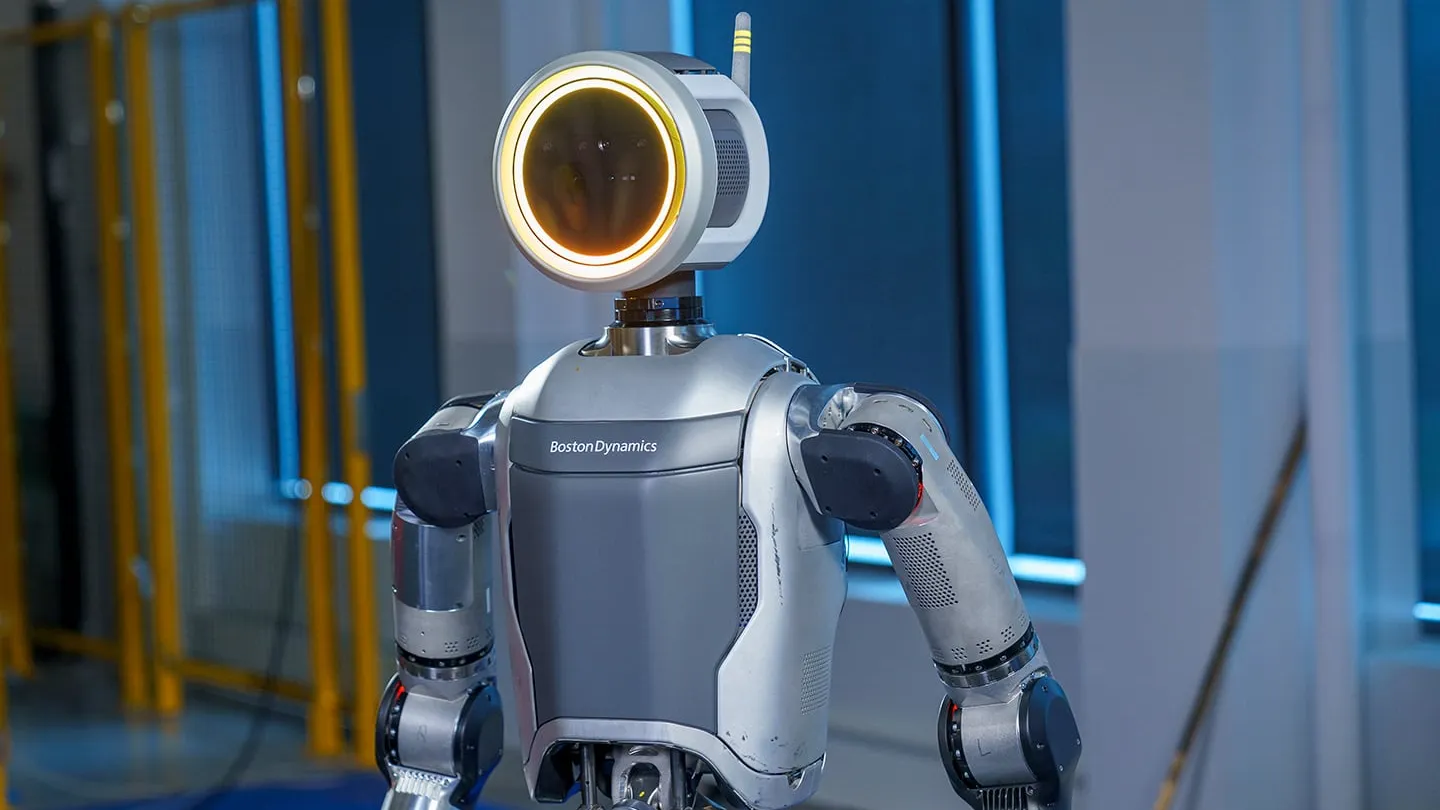

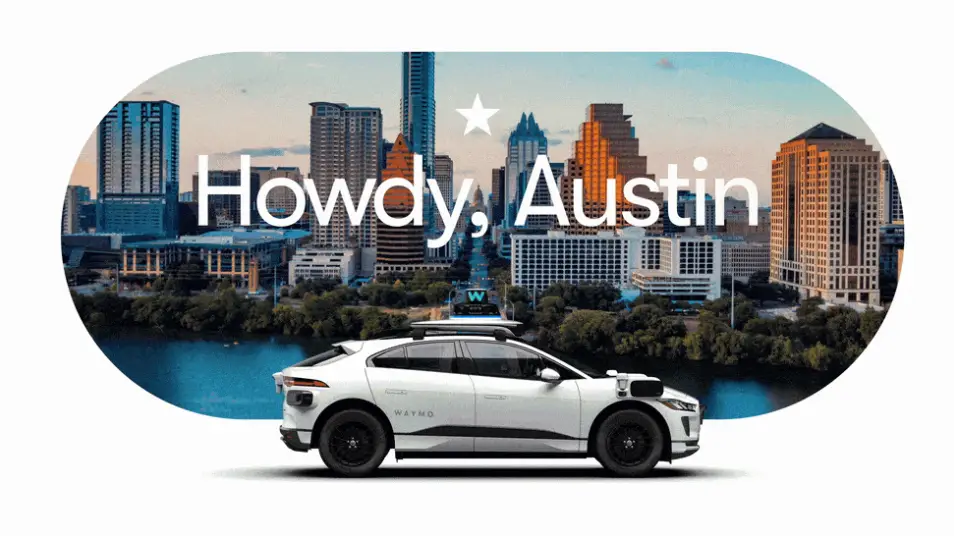

What are examples of autonomous car?

Waymo, Tesla, Nuro, and Baidu are among the companies developing autonomous vehicles at different levels of automation. While Waymo (formerly Google’s self-driving car project), China’s Baidu, and the Nuro R2 operate at Level 4 automation, Tesla has achieved Level 2 automation. However, achieving full Level 5 autonomy in all driving conditions and locations remains an ongoing challenge.

- What are the main cybersecurity and privacy concerns for autonomous vehicles?

The main cybersecurity concerns for autonomous vehicles include unauthorized access to the vehicle’s control systems, data breaches, and denial-of-service attacks. Privacy concerns include the potential for surveillance and the need to protect personal information about the vehicle’s occupants and their travel habits.
